
Preview!

First of all, I cannot overstate that Blob Index is in preview at the time of writing. This means there are many restrictions, possible breaking changes, and even a chance that the feature will not make it into the final product at all. However, it’s already promising, and that’s why I wrote this article.

What problem is solved?

Storage accounts are one of the most used features of Azure. A common scenario is simply storing files or BLOBs (binary large objects) in containers. This performs well as long as you stick to 2 main use cases: storing a file, and retrieving its data when you know its exact URL.

Another important case would be, of course, filtering and listing BLOBs by different criteria. That is not performant at all, which is why it was always necessary to bring in alternatives like Azure Search for these kinds of jobs. This is where the new Blob Index wants to kick in.

Blob Index lets us store key-value pairs alongside the data, which are then turned into a performant index. There always was a way to store metadata for BLOBs, but it was not possible to run queries against it. One could only read this meta-information for a single BLOB and then do whatever one wanted with it. Although this is still possible and has its use cases, we want to filter for certain BLOBs quickly, and that’s what Blob Index promises us.

Some more details

Currently only strings are supported as values for index keys. In a query, the key has to be enclosed in double quotes and the value in single quotes.
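To make the quoting rules concrete, here is a small sketch of a helper that assembles a filter string. TagQuery, Clause and Build are hypothetical names of my own, not part of the Azure SDK, and the sketch assumes that keys and values contain no quote characters (no escaping is done). The optional @container condition mirrors the query used later in this article.

```csharp
using System.Linq;

// Hypothetical helper, not part of the Azure SDK: builds a Blob Index filter string.
// ASSUMPTION: keys and values contain no quote characters, so nothing is escaped.
public static class TagQuery
{
    // One equality condition: key in double quotes, value in single quotes, e.g. "month" = '3'
    public static string Clause(string key, string value) => $"\"{key}\" = '{value}'";

    // Joins all conditions with AND; a non-null container adds an @container condition first.
    public static string Build(string container, params (string Key, string Value)[] tags)
    {
        var clauses = tags.Select(t => Clause(t.Key, t.Value));
        if (container != null)
        {
            clauses = clauses.Prepend($"@container = '{container}'");
        }
        return string.Join(" AND ", clauses);
    }
}
```

For example, TagQuery.Build("test", ("month", "3")) produces the same string as the query in the C# sample below: @container = 'test' AND "month" = '3'.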

After the key-value pairs are sent to Azure Storage, a multi-dimensional index is created (I don’t know anything about the underlying technology, but I guess it’s Azure Search). The index is ready after a few moments (I also don’t know how long it takes to build or rebuild it).

Although only strings are allowed as values, the index can handle timestamps as well if they are formatted in ISO 8601, in other words something like 2007-08-31T16:47+00:00, the format commonly used in JSON. Numbers are treated as strings.
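Because the values are stored as strings, a safe way to write timestamp tags is a fixed-width ISO 8601 string, always in UTC. The following is a minimal sketch under that assumption (TagTimestamp and Format are hypothetical names): normalizing both the offset and the width means that comparing the strings character by character yields chronological order.

```csharp
using System;
using System.Globalization;

// Hypothetical helper: formats a timestamp as a fixed-width ISO 8601 UTC string,
// e.g. 2007-08-31T16:47:00Z, so that string order equals chronological order.
public static class TagTimestamp
{
    public static string Format(DateTimeOffset value) =>
        value.ToUniversalTime()
             .ToString("yyyy-MM-dd'T'HH:mm:ss'Z'", CultureInfo.InvariantCulture);
}
```

A tag could then be written as { "myTimeStamp", TagTimestamp.Format(DateTimeOffset.UtcNow) }. Keep in mind that, if values are compared as strings, an upper bound of '2020-04-30' excludes anything later on April 30 itself, because '2020-04-30T10:00:00Z' sorts after '2020-04-30'.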

Currently only 10 keys per BLOB are allowed, and they are limited to 768 bytes per entry. Keys and values are case-sensitive.
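A quick client-side check against these limits can save you a failed upload. This is a hypothetical sketch (TagLimits and Validate are my own names), and it assumes that “768 bytes per entry” means key plus value measured as UTF-8 bytes, which I have not verified against the service.

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical pre-upload check based on the preview limits quoted above.
// ASSUMPTION: an "entry" is key + value, measured in UTF-8 bytes.
public static class TagLimits
{
    public const int MaxTagsPerBlob = 10;
    public const int MaxBytesPerEntry = 768;

    // Returns a list of problems; an empty list means the tags look acceptable.
    public static IList<string> Validate(IDictionary<string, string> tags)
    {
        var problems = new List<string>();
        if (tags.Count > MaxTagsPerBlob)
        {
            problems.Add($"{tags.Count} tags exceed the limit of {MaxTagsPerBlob}.");
        }
        foreach (var tag in tags)
        {
            var bytes = Encoding.UTF8.GetByteCount(tag.Key) + Encoding.UTF8.GetByteCount(tag.Value);
            if (bytes > MaxBytesPerEntry)
            {
                problems.Add($"Tag \"{tag.Key}\" uses {bytes} bytes (limit {MaxBytesPerEntry}).");
            }
        }
        return problems;
    }
}
```

Since keys are case-sensitive, “Month” and “month” count as two different tags; a Dictionary<string, string> with the default comparer treats them the same way.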

Another important point is that index tags (key-value pairs) come with a price. According to the documentation, Microsoft counts the average number of index tags per month and bills you for that: $0.015 per 10,000 index tags.
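To get a feeling for the numbers, here is a back-of-the-envelope calculation for the test in this article (12,000 BLOBs with one tag each). It is a sketch that assumes the quoted preview price and simple pro-rating of the monthly average; TagCost and MonthlyCost are hypothetical names, and the actual billing granularity may differ.

```csharp
// Back-of-the-envelope estimate based on the preview price quoted above.
// ASSUMPTION: the monthly average tag count is billed pro rata
// (no rounding up to full blocks of 10,000 tags).
public static class TagCost
{
    public const decimal PricePer10kTags = 0.015m; // USD per 10,000 tags per month

    public static decimal MonthlyCost(long averageTagCount) =>
        averageTagCount / 10_000m * PricePer10kTags;
}
```

TagCost.MonthlyCost(12_000) comes to $0.018 per month, and even a million tags would only cost $1.50.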

Getting started

What you need is an Azure subscription and permission to create resources in one of the following Azure regions:

- Canada Central
- Canada East
- France Central
- France South

Please don’t ask me about Microsoft’s choice of regions. Either those are the most risk-free regions or the team has some weird French-speaking background. Anyway, the storage account has to be located in one of those regions.

Before you create the resources, you have to register the feature in Azure. Here are the samples for PowerShell and Azure CLI:

REMEMBER TO SELECT THE CORRECT SUBSCRIPTION BEFORE YOU APPLY THIS!

```powershell
Register-AzProviderFeature -FeatureName BlobIndex -ProviderNamespace Microsoft.Storage
Register-AzResourceProvider -ProviderNamespace Microsoft.Storage
```

```bash
az feature register --namespace Microsoft.Storage --name BlobIndex
az provider register --namespace 'Microsoft.Storage'
```

If you (like me) want to get your hands on the feature using .NET, you should add the following NuGet source to your installation:

https://azuresdkartifacts.blob.core.windows.net/azure-sdk-for-net/index.json

(In Visual Studio, for instance, you would go to Tools -> Options -> NuGet Package Manager -> Package Sources and add it there.)

Once you’ve completed or ensured all these steps you’re good to go.

Creating the storage account

All you need is a standard storage account of the kind StorageV2 (general purpose v2), which is currently the default kind.

Using Azure CLI, this would do the job:

```bash
az storage account create -g [RESOURCE-GROUP] -n [STORAGE-ACCOUNT-NAME] --location "France Central" --kind StorageV2
```

Uploading data with C#

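I wanted to keep this sample simple, so I collected all the source code in the Program.cs of a plain .NET Core console application. So

```bash
dotnet new console
```

should do the trick for you. You will also need a preview build of the Azure.Storage.Blobs package with Blob Index support from the NuGet source above.

Let’s start with uploading indexed BLOBs. Here is what I need in Program.cs: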

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Threading.Tasks;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;
using Azure.Storage.Blobs.Specialized; // GetAppendBlobClient extension method

class Program
{
    static readonly string ContainerName = "test";
    static readonly int ItemsPerMonth = 1000;

    // Lazily created clients, reused for every request.
    static BlobServiceClient _serviceClient;
    static BlobContainerClient _client;

    static async Task Main(string[] args)
    {
        await PerformUploadAsync();
        Console.WriteLine("Upload complete.");
    }

    static async Task PerformUploadAsync()
    {
        for (var month = 1; month <= 12; month++)
        {
            Console.WriteLine($"Uploading blobs with index 'month' = '{month}'...");
            // Blob Index values must be strings.
            var index = new Dictionary<string, string> { { "month", month.ToString() } };
            for (var i = 1; i <= ItemsPerMonth; i++)
            {
                await UploadIndexBlobAsync($"month-{month}-{i:0000}.test", index);
                Trace.TraceInformation($"Uploaded {i} for month {month}.");
            }
        }
    }

    static async Task UploadIndexBlobAsync(string blobName, Dictionary<string, string> indexValues)
    {
        var client = GetContainerClient();
        // Runs for every blob to keep the sample short; once per run would be enough.
        await client.CreateIfNotExistsAsync();
        var appendClient = client.GetAppendBlobClient(blobName);
        var options = new CreateAppendBlobOptions
        {
            // The Blob Index tags are written together with the (empty) blob.
            Tags = indexValues
        };
        await appendClient.CreateAsync(options).ConfigureAwait(false);
    }

    static BlobServiceClient GetServiceClient()
    {
        if (_serviceClient == null)
        {
            _serviceClient = new BlobServiceClient("[YOUR STORAGE CONNECTION STRING]");
        }
        return _serviceClient;
    }

    static BlobContainerClient GetContainerClient()
    {
        if (_client == null)
        {
            var serviceClient = GetServiceClient();
            _client = serviceClient.GetBlobContainerClient(ContainerName);
        }
        return _client;
    }
}
```

The main fun happens in UploadIndexBlobAsync. This method gets a container client and ensures that the desired container exists. After that it uses a special AppendBlobClient, which lets us not only create the BLOB but also pass in options. The options are where the “new” tags (aka Blob Index data) go, as opposed to the “old” metadata.

I wanted a more realistic test, so I create 12,000 BLOBs: 1,000 for each “month”, which is represented by a number from 1 to 12. Each BLOB is an empty file indexed with the key “month”.

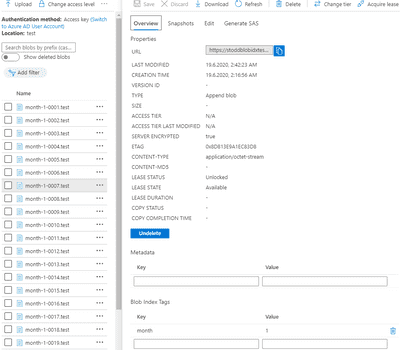

In order to allow you to stay with me here is a screenshot from the Azure Portal showing the resulting index values:

However, the documentation tells us that the indexing operation may take a while, so let’s look at the source code for querying the BLOBs in the meantime.

Querying the data with C#

Here is my test method:

```csharp
private static async Task PerformQueryAsync()
{
    var results = new List<FilterBlobItem>();
    var client = GetServiceClient();
    // Key in double quotes, value in single quotes; @container limits the search to one container.
    var query = @$"@container = '{ContainerName}' AND ""month"" = '3'";
    Console.WriteLine("Searching for all blobs with month 3...");
    var stopWatch = new Stopwatch();
    stopWatch.Start();
    // Run the same query 10 times and report the average duration.
    for (var i = 1; i <= 10; i++)
    {
        results.Clear();
        await foreach (var filterBlobItem in client.FindBlobsByTagsAsync(query).ConfigureAwait(false))
        {
            results.Add(filterBlobItem);
        }
    }
    stopWatch.Stop();
    Console.WriteLine($"Found {results.Count} in {stopWatch.ElapsedMilliseconds / 10} ms average.");
}
```

On my machine this results in:

```
Searching for all blobs with month 3...
Found 1000 in 119 ms average.
```

To be precise: after this time span, the information about the BLOBs (not the BLOB contents themselves) is available locally, so we could traverse it in our code!

If we change the query to

```csharp
var query = @$"""month"" = '3'";
```

we can search for BLOBs in all containers of the storage account.
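Before trying a range query, keep in mind that the month tags uploaded above are plain, unpadded strings from '1' to '12', and tag values are compared as strings. The following hypothetical demo (LexicographicDemo and MonthsInRange are my own names) mimics this with an ordinal string comparison. It assumes the service orders values the same way, which is consistent with Microsoft’s advice to zero-pad numbers.

```csharp
using System;
using System.Linq;

// Demo: which months fall into a range when their tag values are compared as strings?
// ASSUMPTION: ordinal string comparison approximates the ordering the index uses.
public static class LexicographicDemo
{
    // Returns the months 1..12 whose tag value lies between lower and upper (inclusive).
    public static int[] MonthsInRange(string lower, string upper, Func<int, string> toTag) =>
        Enumerable.Range(1, 12)
            .Where(m => string.CompareOrdinal(toTag(m), lower) >= 0
                     && string.CompareOrdinal(toTag(m), upper) <= 0)
            .ToArray();
}
```

With unpadded values, MonthsInRange("1", "3", m => m.ToString()) returns 1, 2, 3, 10, 11 and 12. With zero-padded values, MonthsInRange("01", "03", m => m.ToString("D2")) returns only 1, 2 and 3, so writing month.ToString("D2") at upload time (a hypothetical change to the upload code) would keep the order intact.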

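This, however, does not do what you might expect:

```csharp
// WILL NOT WORK AS EXPECTED!!!
var query = @$"""month"" >= '1' AND ""month"" <= '3'";
```

This is because Blob Index has no clue that month is a number: the values are compared as strings, so “10”, “11” and “12” also fall between “1” and “3”. Range queries like this only work as intended when the string order matches the logical order, as with ISO 8601 timestamps (sample taken from Microsoft):

```csharp
// WILL WORK
var query = @$"""myTimeStamp"" >= '2020-04-20' AND ""myTimeStamp"" <= '2020-04-30'";
```

Summary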

I think this looks pretty promising! I could have used it plenty of times in my projects, because traversing all the files and then reading out their metadata is far too slow once you have several thousand BLOBs, which isn’t unusual.

I like the approach and I think the costs are fair. Let’s see how this evolves over time!